I manage a self-hosted home server setup with services like Plex, Nextcloud, Home Assistant, Zigbee device integrations, VPN services, Homebridge, GitHub, various logging tools, and more. So far it has been easy to manage, as the system has been running without any problems.

HomeLab:

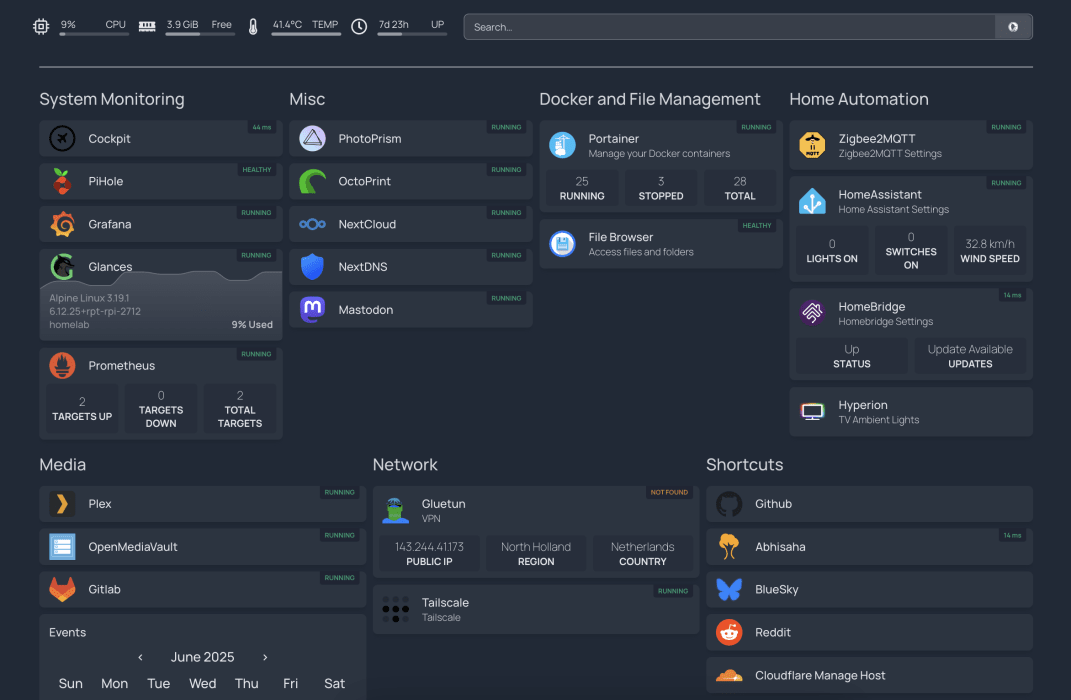

Since I use many different services, managing their URLs became inconvenient. So I added a Homepage dashboard that pulls all the services and links into a single view.

HomeLab Dashboard:

I am running 30 containers, so I wanted to automate the management of these services using an agentic workflow. The goal was to create a system that could understand natural language requests, decide which actions to take, and execute them against my home infrastructure.

For example, I wanted the agent to be able to:

- Change the lights to “gaming mode” or a “bar-like” ambiance

- Give me memory and system information.

- Why is the CPU usage so high?

- Analyze my stock portfolio and raise red flags based on current news

A few more examples of what I want the agent to do:

- Which process is using the most memory?

- Recommend a good stock to invest in

- Analyze NVIDIA stock and provide a detailed report

- Were there any system errors yesterday?

- Generate a full system report and usage of all services

- Restart containers that are experiencing errors

- Which container has CPU usage above 50%? Restart that container if it has any errors and also print the errors

This post is not a tutorial. It is about the architecture, design, and capabilities of the agentic workflow and what I have built with it so far.

What are Agentic Workflows

An agentic workflow is a system in which an AI agent autonomously plans, executes, and manages tasks using tools and services. The agent interprets natural language requests, decides which tools to use, and acts on the user’s intent. This makes it possible to automate complex tasks that require multiple steps or interactions with different systems. Unlike traditional automation scripts, which follow a fixed path, an agent can adapt to changing requirements and user input dynamically.

The Structure of an Agentic Workflow

Agentic workflows typically follow a loop:

- Perception: Gather data from the environment or tools.

- Reasoning: Analyze data to understand the situation.

- Planning: Decide on the next best action.

- Action: Execute a task using tools or APIs.

- Reflection: Review outcomes and update memory/context.

This cycle allows agents to work independently or in multi-agent systems, where different agents collaborate, negotiate, and delegate.
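The loop above can be sketched in a few lines of TypeScript. This is a minimal, self-contained illustration under stated assumptions, not how the Vercel AI SDK implements it: the `decide` function stands in for the language model, and the `cpu_stats` and `container_logs` tools are hypothetical stubs for services like Glances and Loki. The `maxSteps` parameter of this sketch caps the loop so an ambiguous request cannot run forever.

```typescript
// A tool takes string arguments and returns an observation string.
type Tool = (args: Record<string, string>) => string;

// What the "model" decides at each step: call a tool, or finish with an answer.
type Decision =
  | { kind: "call"; tool: string; args: Record<string, string> }
  | { kind: "finish"; answer: string };

interface Step {
  tool: string;
  observation: string;
}

// Hypothetical stub tools standing in for Glances, Loki, etc.
const tools: Record<string, Tool> = {
  cpu_stats: () => "cpu=92%, top container=plex",
  container_logs: (args) => `logs for ${args.name}: transcoder crashed`,
};

// Stand-in for the LLM: reasons over the history (memory) and plans the next action.
function decide(question: string, history: Step[]): Decision {
  if (history.length === 0) return { kind: "call", tool: "cpu_stats", args: {} };
  if (history.length === 1) return { kind: "call", tool: "container_logs", args: { name: "plex" } };
  return { kind: "finish", answer: `${question} -> ${history.map((s) => s.observation).join("; ")}` };
}

// Perceive -> reason/plan -> act -> reflect, bounded by maxSteps.
function runAgent(question: string, maxSteps = 5): { answer: string; steps: Step[] } {
  const history: Step[] = [];
  for (let i = 0; i < maxSteps; i++) {
    const decision = decide(question, history); // reasoning + planning
    if (decision.kind === "finish") return { answer: decision.answer, steps: history };
    const tool: Tool | undefined = tools[decision.tool];
    // Action: an unknown tool name becomes an observation instead of crashing the loop.
    const observation = tool ? tool(decision.args) : `error: unknown tool ${decision.tool}`;
    history.push({ tool: decision.tool, observation }); // reflection: update memory
  }
  return { answer: "stopped: step limit reached", steps: history };
}

const result = runAgent("Why is CPU usage high?");
console.log(result.answer);
```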

In this post, I will show you how I built an agentic system in my homelab that allows a language model to plan, call tools, and automate tasks.

The scripts included in this post are meant solely to illustrate the core concept. For simplicity and to highlight the agentic workflow, error handling and try-catch blocks have been omitted.

External Libraries Used

I used the following libraries to build this system:

- Vercel AI SDK: For integrating with language models and managing tool calls.

- Zod: For schema validation of tool parameters.

- chalk: For colorful CLI output.

- Listr: For async logging.

- axios: For making HTTP requests to external APIs (like Home Assistant, Portainer, etc.).

Communication Flow

I interact with the agent through a command-line interface (CLI). Later on, I would like to add speech-to-text and text-to-speech capabilities to make it more interactive, but for now, something like this works for me:

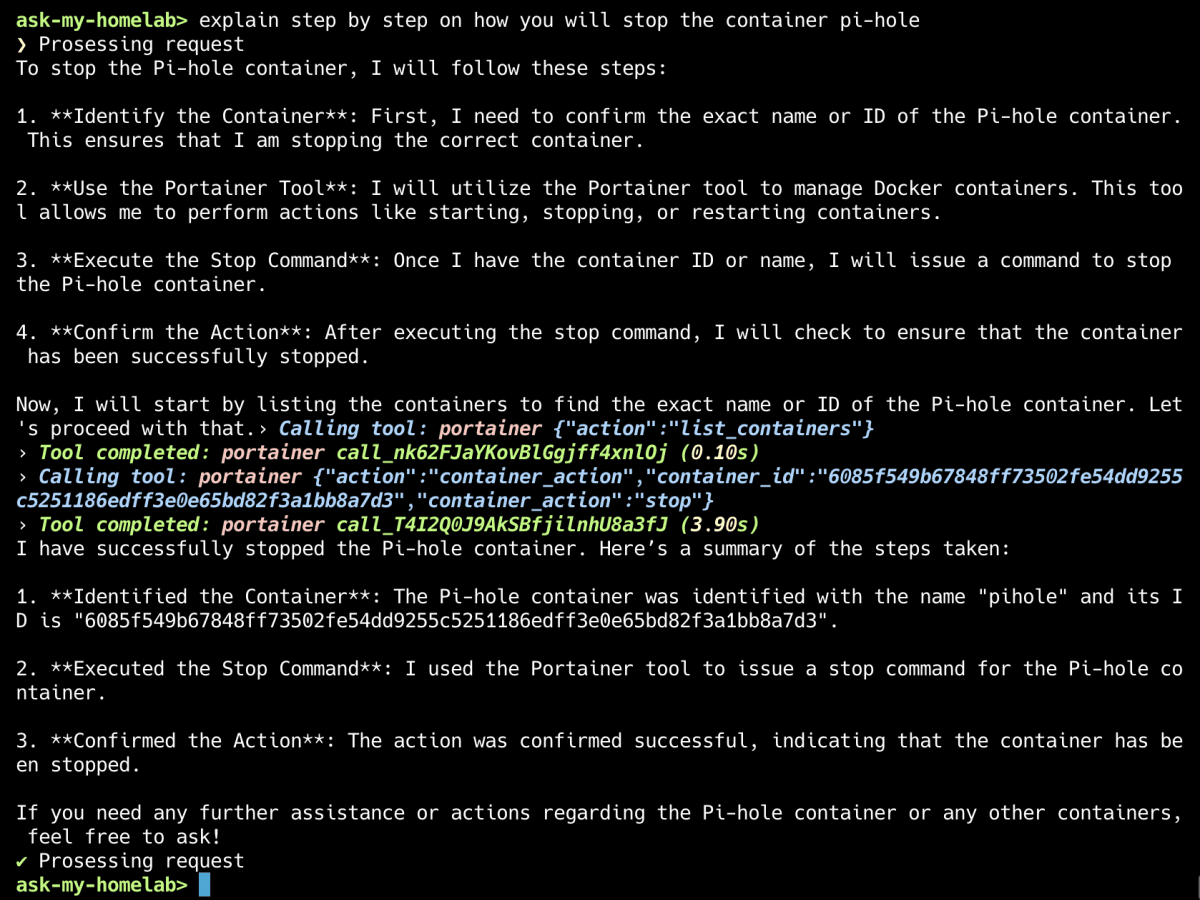

Tool calling process: the agent is asked to recommend a stock. Since it found multiple suitable options, it paused and prompted the user for input before continuing. It then fetched detailed financials, news, and technicals before presenting a comprehensive stock report.

The animated diagram below shows the communication flow between the user, the agent, and the tools for the same scenario, including the architecture and the data flow.

Architecture Overview

How does it work?

At its core, this system orchestrates the interaction between natural language understanding, intelligent tool selection, and autonomous execution. Here’s what happens under the hood:

The AI Agent’s Decision Engine: When you ask a question like “Why is my CPU usage high?”, the system doesn’t just execute a predefined script. Instead, the language model analyzes your intent, considers the available tools, and creates a multi-step execution plan. It might decide to first check system metrics via Glances, then query logs through Loki for error patterns, and finally use Portainer to investigate specific containers autonomously.

Tool Orchestration & State Management: The Vercel AI SDK handles the complex coordination between the agent’s reasoning and tool execution. Each tool call is validated against Zod schemas, executed with proper error handling, and the results are fed back into the conversation context.

Streaming Intelligence: Unlike traditional automation scripts that run silently, this system provides real-time feedback through streaming responses. You can watch the agent think through problems, make decisions, and execute actions step-by-step.

Contextual Memory & Learning: The system maintains conversation history and tool state, allowing the agent to reference previous actions and build upon earlier decisions. For instance, if you ask “restart the container that was having issues,” the agent can recall which container was problematic from earlier in the conversation.
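A minimal sketch of what has to be remembered for that kind of recall, assuming tool results are kept as structured entries in the conversation history. The `HistoryEntry` shape and the `findLastProblemContainer` helper are hypothetical: in the real system the model reads the history itself, but the helper makes explicit which details (tool, container, status) need to survive in context.

```typescript
// One remembered tool result in the conversation history (hypothetical shape).
interface HistoryEntry {
  tool: string;
  container?: string;
  status: "ok" | "error";
  summary: string;
}

// Walk the history backwards to find the most recent container that reported an error.
function findLastProblemContainer(history: HistoryEntry[]): string | undefined {
  for (let i = history.length - 1; i >= 0; i--) {
    const entry: HistoryEntry | undefined = history[i];
    if (entry !== undefined && entry.status === "error" && entry.container) {
      return entry.container;
    }
  }
  return undefined;
}

const conversation: HistoryEntry[] = [
  { tool: "loki", container: "nextcloud", status: "error", summary: "DB connection refused" },
  { tool: "glances", status: "ok", summary: "CPU 40%" },
  { tool: "portainer", container: "plex", status: "ok", summary: "running" },
];

// "restart the container that was having issues" resolves to nextcloud here.
console.log(findLastProblemContainer(conversation)); // nextcloud
```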

Human-in-the-Loop Integration: When the agent encounters ambiguous situations or needs human judgment, it can pause execution and request input from the user. This creates a collaborative workflow where the AI handles routine tasks while involving humans for critical decisions.

Creating a Tool

Tools are the building blocks that enable the AI to interact with various services and perform specific tasks. Since the AI decides when and how to use each tool, it’s important to define the tool’s parameters, metadata, and execution clearly.

And this is where Prompt Engineering comes into play.

I need to define the tool’s purpose, its parameters, and how it should be used in a way that is clear and understandable to the AI model. The tool executes API calls with certain parameters. To describe these parameters, I use Zod schemas that define the expected input and output types, so the AI model can understand what is required and how to use the tool effectively.

Let me show you an example. Let’s say I want to create a tool to manage Docker containers using the Portainer API. First, I define the schema.

```typescript
import { z } from 'zod';

const ParamsSchema = z.object({
  action: z
    .enum(['restart', 'stop', 'start', 'status', 'list', 'remove'])
    .describe('Action to perform on the container'),
  // Optional so the agent can call `list` before it knows the ID
  containerId: z
    .string()
    .optional()
    .describe('ID of the Docker container to manage (not needed for list)'),
  stack_name: z
    .string()
    .optional()
    .describe('Name of the Docker stack to manage'),
});
```

When a user asks the agent to perform an operation, the agent will try to extract as many parameters as possible from the user’s input.

For example, if the user says “restart the container with ID 12345”, the agent will extract the `action` as `restart` and the `containerId` as `12345`. If the user provides only the container name, the agent will try to find the container ID by calling the tool with the `list` action, extracting the container ID from the result, and then calling the tool again with the `restart` action and that `containerId`.
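The list-then-restart pattern can be sketched as follows. `portainerTool` here is a hypothetical in-memory stub, not the Portainer API, and the container IDs are made up; it only shows how the output of the `list` call supplies the `containerId` for the follow-up `restart` call, which is the sequence the agent works out on its own.

```typescript
type Action = "restart" | "stop" | "start" | "status" | "list" | "remove";

interface ContainerInfo {
  id: string;
  name: string;
  state: string;
}

// Hypothetical in-memory stand-in for the Portainer-backed tool.
const containers: ContainerInfo[] = [
  { id: "12345", name: "plex", state: "running" },
  { id: "67890", name: "nextcloud", state: "exited" },
];

function portainerTool(params: { action: Action; containerId?: string }): ContainerInfo[] | string {
  if (params.action === "list") return containers;
  const target = containers.find((c) => c.id === params.containerId);
  if (!target) return `error: no container with ID ${params.containerId ?? "(missing)"}`;
  if (params.action === "restart") target.state = "running";
  return `${params.action} ${target.name}: ${target.state}`;
}

// What the agent effectively does when the user only gives a name:
// 1) call `list`, 2) map the name to an ID, 3) call `restart` with that ID.
function restartByName(containerName: string): string {
  const listed = portainerTool({ action: "list" });
  if (typeof listed === "string") return listed;
  const match = listed.find((c) => c.name === containerName);
  if (!match) return `error: no container named ${containerName}`;
  const outcome = portainerTool({ action: "restart", containerId: match.id });
  return typeof outcome === "string" ? outcome : "unexpected list result";
}

console.log(restartByName("nextcloud")); // restart nextcloud: running
```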

Zod ensures that the parameters received from the AI are type-safe and valid before the tool’s logic is executed. Each tool is defined as an object and passed to the SDK’s `tool` function.

```typescript
import { tool } from 'ai';

// The SDK takes the tool's name from its key in the `tools` object,
// e.g. tools: { portainer_tool: PortainerTool }
export const PortainerTool = tool({
  description: 'Manages Docker containers and stacks using the Portainer API.',
  parameters: ParamsSchema,
  execute: async (params, executionContext) => {
    // Validate parameters using Zod (an extra safeguard on top of the SDK's own argument check)
    const parseResult = ParamsSchema.safeParse(params);
    if (!parseResult.success) {
      return { error: `Invalid parameters: ${parseResult.error.message}` };
    }
    // portainerAPI is my helper around the Portainer REST API (not shown)
    const response = await portainerAPI(parseResult.data);
    return { data: response };
  },
});
```

To simplify tool reuse and standardize behavior, I created a custom `BaseTool` class that every tool extends. This offers several advantages:

- A tool can call an API during initialization. In the Home Assistant tool, I fetch all the lights and include the list in the description, so the model knows each light’s name and ID.

- The `BaseTool` class provides a standard way to implement tools, which keeps them consistent.

- You can add common methods or properties that are reused across tools, such as logging and error handling.

- For a consistent response structure, I wrote a response formatter in `BaseTool`.
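JavaScript constructors cannot be `async`, so one way to fetch data before a tool is ready is a static factory that loads the data first and then calls a synchronous constructor. This is a sketch under assumptions: `fetchLights` is a hypothetical stand-in for the Home Assistant API call, and the entity IDs are invented.

```typescript
interface Light {
  entityId: string;
  friendlyName: string;
}

// Hypothetical stand-in for the Home Assistant call that lists light entities.
async function fetchLights(): Promise<Light[]> {
  return [
    { entityId: "light.living_room", friendlyName: "Living Room" },
    { entityId: "light.desk_strip", friendlyName: "Desk Strip" },
  ];
}

class HomeAssistantTool {
  readonly name = "homeassistant";
  readonly description: string;
  readonly lights: Light[];

  // The constructor stays synchronous; it receives data fetched beforehand.
  private constructor(lights: Light[]) {
    this.lights = lights;
    const lightList = lights.map((l) => `${l.friendlyName} (${l.entityId})`).join(", ");
    // Embedding names and IDs lets the model map "desk lights" to a valid entity ID.
    this.description = `Controls smart home devices. Available lights: ${lightList}.`;
  }

  // Async factory: fetch first, then construct.
  static async create(): Promise<HomeAssistantTool> {
    return new HomeAssistantTool(await fetchLights());
  }
}

HomeAssistantTool.create().then((tool) => console.log(tool.description));
```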

```typescript
export class PortainerTool extends BaseTool {
  name = 'portainer_tool';
  description = 'Manages Docker containers and stacks using the Portainer API.';
  parameters = ParamsSchema;

  async execute(params, toolState, executionContext) {
    // tool-specific logic goes here
  }
}

const portainerTool = new PortainerTool();
// extract the fields and pass them to the tool function
```

- Schema Matters – Use Zod to define precise input requirements

- Metadata is Documentation – Clear descriptions improve LLM tool selection

- Standardize Responses – Always return the `{ data, error? }` format
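A minimal sketch of such a base class, assuming a hand-rolled design rather than anything the Vercel AI SDK provides. `BaseTool`, `ToolResponse`, `run`, and the `ContainerStatusTool` subclass are illustrative names, not the actual code from this project; the point is that each subclass implements only `execute`, while the shared `run` wrapper handles errors and always returns the `{ data, error? }` shape.

```typescript
// Standard response shape returned by every tool.
interface ToolResponse<T> {
  data: T | null;
  error?: string;
}

abstract class BaseTool<P, T> {
  abstract readonly name: string;
  abstract readonly description: string;

  // Subclasses implement only the tool-specific logic.
  protected abstract execute(params: P): Promise<T>;

  // Shared wrapper: logging, error handling, and response formatting live in one place.
  async run(params: P): Promise<ToolResponse<T>> {
    try {
      const data = await this.execute(params);
      return { data };
    } catch (err) {
      const message = err instanceof Error ? err.message : String(err);
      console.error(`[${this.name}] ${message}`);
      return { data: null, error: message };
    }
  }
}

// Hypothetical subclass with a stubbed status lookup.
class ContainerStatusTool extends BaseTool<{ containerId: string }, string> {
  readonly name = "container_status";
  readonly description = "Returns the status of a Docker container by ID.";

  protected async execute(params: { containerId: string }): Promise<string> {
    if (params.containerId === "") throw new Error("containerId is required");
    return `container ${params.containerId} is running`;
  }
}

const statusTool = new ContainerStatusTool();
statusTool.run({ containerId: "12345" }).then((r) => console.log(r));
```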

Interrupting the agent for user input

When building AI agents that interact with real-world systems, it’s often necessary to pause execution and ask the user for input, especially for critical tasks like container management, system changes, or when human judgment is needed.

So I implemented a tool called UserPromptTool that can interrupt the agent’s workflow to request user input.

```typescript
// Schema defines WHEN and HOW to interrupt
const PromptSchema = z.object({
  type: z.enum(['select', 'text']),
  message: z.string(),
  options: z.string().array(),
  required: z.boolean().default(true),
});
```

Notice the type field, which can be either select or text. This allows the agent to know whether it should present a list of options for the user to choose from or simply ask for free-form text input.
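A sketch of how the CLI side might resolve the two prompt types, assuming the prompt has already been validated against `PromptSchema`. `resolvePrompt` and the injected `ask` function are hypothetical; `ask` is passed in so the example runs without a terminal, whereas a real CLI would read from stdin.

```typescript
interface UserPrompt {
  type: "select" | "text";
  message: string;
  options: string[];
  required: boolean;
}

type Resolution = { ok: true; value: string } | { ok: false; error: string };

// `ask` is injected so the sketch can run without a terminal.
function resolvePrompt(prompt: UserPrompt, ask: (question: string) => string): Resolution {
  if (prompt.type === "select") {
    // Show numbered options and accept the number of the choice.
    const menu = prompt.options.map((opt, i) => `${i + 1}. ${opt}`).join("\n");
    const answer = ask(`${prompt.message}\n${menu}`);
    const index = Number.parseInt(answer, 10) - 1;
    const choice: string | undefined = prompt.options[index];
    if (choice === undefined) return { ok: false, error: `invalid choice: ${answer}` };
    return { ok: true, value: choice };
  }
  // Free-form text input.
  const text = ask(prompt.message).trim();
  if (prompt.required && text === "") return { ok: false, error: "an answer is required" };
  return { ok: true, value: text };
}

const pick = resolvePrompt(
  { type: "select", message: "Which stock should I analyze?", options: ["NVDA", "AMZN", "MSFT"], required: true },
  () => "2",
);
console.log(pick); // { ok: true, value: 'AMZN' }
```

The result goes back to the agent as the tool's output, so the model continues with the user's choice instead of guessing.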

Tools I implemented

Below are the tools I implemented. They are working quite well, but I keep tweaking the prompts to improve the agent’s understanding and performance.

| Tool Name | Description | Category |
|---|---|---|
| glances | Monitor system resources | System |
| system_status | System health & usage | System |
| prometheus | Query metrics & alerts | Metrics |
| loki | Container logs | Logging |
| network | Network management | Network |
| portainer | Manage containers & stacks | Docker |
| AgenticSelfHealingTool | Diagnostics & auto-repair | System |
| homeassistant | Smart home control | Home |
| plex | Plex media server | Media |
| stock_data_tool | Stock data & metrics | Stocks |
| financial_data_tool | Financial statements | Finance |
| analyst_data_tool | Analyst ratings | Finance |
| news_data_tool | News & sentiment | Stocks |
| technical_analysis_tool | Technical indicators | Stocks |
| portfolio_analyzer_tool | Portfolio analysis | Stocks |
| stock_recommendation_tool | Stock recommendations | Stocks |
| brave_search_tool | Web/news/image search | Search |
| user_prompt | User input | User |

Tapping into AI’s lifecycle methods for logging

Without the lifecycle methods, it would be very difficult to debug the agent and improve the prompts. While the Vercel AI SDK executes and streams a response, it exposes a lot of information about the agent’s state: its reasoning, the tools it calls, the parameters it passes, the results it gets, and so on. The SDK provides an `onChunk` callback that can be used for logging. I implemented a custom verbose logger that records the agent’s lifecycle events, tool calls, reasoning, and responses. I stream these logs to a file, which I feed back to the agent for improvement ideas.

You need to explicitly ask the agent to explain the reasoning behind its actions; otherwise, it will not provide any.

```typescript
// `chunk.type` returns these values,
// so you can use it to filter the chunks based on the type.
type ChunkType =
  | "reasoning"
  | "tool-call"
  | "text-delta"
  | "source"
  | "tool-call-streaming-start"
  | "tool-call-delta";
```
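A sketch of the filtering that `onChunk` makes possible. The chunk objects are simplified stand-ins with a `type` and a few hypothetical fields, not the SDK's exact chunk shapes; in the real logger, a function like `formatChunk` would be called from the `onChunk` callback and each non-null line appended to the log file.

```typescript
// Simplified chunk shape for illustration only.
interface Chunk {
  type: string;
  text?: string;
  toolName?: string;
  args?: unknown;
}

// Turn interesting chunks into log lines and skip the noisy ones.
function formatChunk(chunk: Chunk, timestamp: string): string | null {
  switch (chunk.type) {
    case "reasoning":
      return `[${timestamp}] REASONING: ${chunk.text ?? ""}`;
    case "tool-call":
      return `[${timestamp}] TOOL CALL: ${chunk.toolName ?? "?"} ${JSON.stringify(chunk.args)}`;
    default:
      // text-delta and streaming deltas are too granular for the log file.
      return null;
  }
}

const chunks: Chunk[] = [
  { type: "reasoning", text: "CPU is high, check Glances first" },
  { type: "text-delta", text: "The" },
  { type: "tool-call", toolName: "glances", args: { metric: "cpu" } },
];
const lines = chunks
  .map((c) => formatChunk(c, "2025-06-24 12:00:01"))
  .filter((line): line is string => line !== null);
console.log(lines.join("\n"));
```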

Working demos

These are some screen recordings of the agent in action.

I use the Warp terminal, which keeps the prompt at the bottom. In the demos below, the player controls are also at the bottom and cover the prompt. After playing a video, move your mouse outside the player to hide the controls.

Stock Analysis Demo

In this demo, I ask the agent to recommend a stock. The agent finds multiple candidates and asks me to select one of them. It then fetches financials, news, technicals, and risk analysis for the selected stock and provides a detailed report.

Recovering from an error while fixing a Docker container demo

There are also scenarios where the agent recovers from an error and continues the workflow. In the demo below, the network tool has an invalid endpoint. The agent reads the error and finds an alternative way to fix the issue.

Interacting with HomeAssistant Demo

This is a demo of how I can interact with Home Assistant to control my smart home devices. The agent is able to change the color of the lights and turn them on/off. It can also read the state of the devices and provide feedback.

Security Considerations

When building agentic workflows, especially those that interact with sensitive systems or financial data, security and privacy are vital. I store the many API keys as environment variables, and they are never passed to the AI.

Principle of least privilege: I grant only the minimum permissions necessary for each tool or integration. For example, my stock analysis tool doesn’t have access to my home automation APIs.
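One way to apply this to API keys is to hand each tool only the environment variables it declares, so a key never reaches a tool (or the model) that does not need it. The variable names and the `scopedEnv` helper are hypothetical, and the environment is passed in as a plain object so the sketch runs outside Node.

```typescript
// Which secrets each tool is allowed to see (hypothetical variable names).
const toolSecrets: Record<string, string[]> = {
  homeassistant: ["HASS_TOKEN"],
  portainer: ["PORTAINER_API_KEY"],
  stock_data_tool: ["STOCKS_API_KEY"],
};

// Build a per-tool config containing only the declared keys.
function scopedEnv(toolName: string, env: Record<string, string | undefined>): Record<string, string> {
  const allowed = toolSecrets[toolName] ?? [];
  const scoped: Record<string, string> = {};
  for (const key of allowed) {
    const value = env[key];
    if (value !== undefined) scoped[key] = value;
  }
  return scoped;
}

const env = { HASS_TOKEN: "hass-secret", STOCKS_API_KEY: "stocks-secret", PORTAINER_API_KEY: "pt-secret" };
console.log(Object.keys(scopedEnv("stock_data_tool", env))); // [ 'STOCKS_API_KEY' ]
```

A tool that is not listed gets an empty config, so forgetting to declare a secret fails closed.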

I have also made sure not to send personally identifiable information (PII) or sensitive logs to external APIs or LLMs. The AgenticSelfHealingTool is strictly limited to executing validated bash commands: I created a list of 50 pre-approved commands that can be executed, which prevents the agent from running arbitrary commands that could compromise the system.
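A minimal sketch of an allowlist check, assuming commands are compared exactly after whitespace normalization. The three entries are hypothetical examples, not the actual list of 50. Exact matching already rejects anything unlisted; the metacharacter check is an extra guard that also gives a clearer rejection reason.

```typescript
// Hypothetical subset of pre-approved commands; anything else is rejected.
const APPROVED_COMMANDS: ReadonlySet<string> = new Set(["docker ps", "df -h", "free -m"]);

// Characters that would let a command chain or redirect into something else.
const SHELL_METACHARACTERS = /[;&|`$<>]/;

function validateCommand(raw: string): { allowed: boolean; reason: string } {
  // Normalize whitespace so "df   -h" matches "df -h".
  const command = raw.trim().replace(/\s+/g, " ");
  if (SHELL_METACHARACTERS.test(command)) {
    return { allowed: false, reason: "contains shell metacharacters" };
  }
  if (!APPROVED_COMMANDS.has(command)) {
    return { allowed: false, reason: "not in the approved list" };
  }
  return { allowed: true, reason: "approved" };
}

console.log(validateCommand("df   -h")); // { allowed: true, reason: 'approved' }
console.log(validateCommand("df -h; rm -rf /").reason); // contains shell metacharacters
```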

Troubleshooting & Debugging Tips

Building agentic workflows can be complex, and I’ve learned a few things that help me debug and troubleshoot issues. I enable verbose logging for agent reasoning, tool calls, and responses so I can trace the agent’s decision-making process. The Vercel AI SDK responds with a field called `reason`, which I log and which is quite helpful for debugging. As mentioned earlier, I also use the `onChunk` callback to log the agent’s lifecycle events, tool calls, and responses, which shows what the agent is doing at each step and helps identify issues. These logs are streamed to a file named `tool-[yyyy-mm-dd].log` in the `logs` directory, so it’s easy to filter them and clean up logs older than 7 days with a cron job.
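The date-stamped file names are what make the cleanup simple. A sketch of both halves, assuming UTC dates: `logFileName` builds the `tool-[yyyy-mm-dd].log` name, and the hypothetical `expiredLogs` helper selects files dated more than seven days ago, which is the selection the cron job needs to delete. (A cron job using `find` with `-mtime +7` selects by modification time rather than by name, which usually gives the same result for daily logs.)

```typescript
// Build the daily log file name, e.g. tool-2025-06-24.log (UTC date).
function logFileName(date: Date): string {
  return `tool-${date.toISOString().slice(0, 10)}.log`;
}

// Return the log files whose date stamp is more than `days` days before `now`.
function expiredLogs(files: string[], now: Date, days = 7): string[] {
  const cutoff = now.getTime() - days * 24 * 60 * 60 * 1000;
  return files.filter((file) => {
    const match = /^tool-(\d{4}-\d{2}-\d{2})\.log$/.exec(file);
    if (!match) return false; // ignore files that don't follow the naming scheme
    return Date.parse(`${match[1]}T00:00:00Z`) < cutoff;
  });
}

const now = new Date("2025-06-24T12:00:00Z");
console.log(logFileName(now)); // tool-2025-06-24.log
console.log(expiredLogs(["tool-2025-06-10.log", "tool-2025-06-20.log", "notes.txt"], now)); // [ 'tool-2025-06-10.log' ]
```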

I was trying to get the agent to use the Perplexity search tool for more information about stocks, but it wouldn’t; it always chose other tools. So I asked it to explain its reasoning. The log below shows how it reasoned about it.

Reasoning Log File

```text
[2025-06-24 12:00:01] USER: ask-my-homelab> `Provide me step by step plan on how will you get me information about the amazon stock if i should invest? dont execute.`
[2025-06-24 12:00:03] SYSTEM: ❯ Processing request
[2025-06-24 12:00:05] ASSISTANT: `To gather comprehensive information about Amazon (AMZN) stock to help you decide whether to invest, I would follow this step-by-step plan:
  Current Stock Data: Fetch stock price, market cap, P/E ratio, volume, and price change.
  Financial Performance Overview: Retrieve revenue, gross profit, net income, and EPS (last 3 years).
  Balance Sheet Summary: Get total assets, liabilities, equity, debt-to-equity ratio.
  Cash Flow Highlights: Look at operating and free cash flow.
  Efficiency & Profitability Ratios: Return on equity, gross margin, etc.
  Analyst Sentiment and Strategic Outlook: Analyst ratings, price targets, earnings estimates.
  Recent News Affecting Stock Price: News articles, company announcements.
  Technical Analysis: Moving averages, RSI, price history.
  Final Recommendation: Summarize all above and make a recommendation.`
[2025-06-24 12:00:07] SYSTEM: ✔ Processing request
[2025-06-24 12:00:28] USER: ask-my-homelab> `which tools will you use?`
[2025-06-24 12:00:29] SYSTEM: ❯ Processing request
[2025-06-24 12:00:30] ASSISTANT: `I would use the following tools:
  Stock Data Tool
  Financial Data Tool
  Analyst Data Tool
  News Data Tool
  Technical Analysis Tool
  (Balance sheet and cash flow are part of the Financial Data Tool)`
[2025-06-24 12:00:31] SYSTEM: ✔ Processing request
[2025-06-24 12:00:32] USER: ask-my-homelab> `why didnt you choose perplexity_search_tool?`
[2025-06-24 12:00:50] SYSTEM: ❯ Processing request
[2025-06-24 12:00:55] ASSISTANT: `Perplexity Search Tool is good for broad or breaking info, but I used more specialized tools for financial data, ratios, and real-time analysis.
  It's more efficient and reliable for stock research.`
[2025-06-24 12:00:57] SYSTEM: ✔ Processing request
[2025-06-24 12:00:59] USER: ask-my-homelab> `what prompt should i write if i also (along with other tools) want to use perplexity_search_tool for stocks for more information?`
[2025-06-24 12:01:19] SYSTEM: ❯ Processing request
[2025-06-24 12:01:21] ASSISTANT: `Suggested prompt: "I would like to gather comprehensive information about [Stock Ticker] (e.g., AMZN for Amazon) for investment analysis. Include:
  Stock Data Tool: price, market cap, P/E ratio, etc.
  Financial Data Tool: 3-year revenue, net income, EPS.
  Analyst Data Tool: sentiment, ratings, estimates.
  News Data Tool: recent stock-related news.
  Technical Analysis Tool: trends, RSI, moving averages.
  Perplexity Search Tool: additional context or articles on market trends, company updates, or investor sentiment.
  Summarize all and provide a recommendation."`
[2025-06-24 12:01:22] SYSTEM: ✔ Processing request
```

Not all models support reasoning, so I use the `gpt-4o-mini` model, which has better (though not the best) reasoning capabilities and is also very cost-effective.

I’ve run into common pitfalls: incorrect tool parameter schemas (such as Zod validation errors), API rate limits (on the stocks APIs), LLM hallucinations where the agent invents tools or parameters that don’t exist, and even infinite loops or stuck workflows caused by ambiguous prompts. For example, if a stocks API is down, I return a clear response based on the status code, along with a retry parameter. If the agent gets stuck or produces unexpected results, I check the logs for the last successful tool call and review the prompt or tool schema for issues.
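A sketch of that status-code mapping, assuming the `{ data, error? }` format is extended with a `retry` flag. The status codes, messages, and the `describeFailure` name are illustrative, not the actual implementation; the idea is that the model receives an explicit, actionable message instead of a raw exception, which makes blind retries and stuck loops less likely.

```typescript
interface ApiFailure {
  data: null;
  error: string;
  retry: boolean;
  retryAfterSeconds?: number;
}

// Translate an HTTP error status into a response the model can act on.
function describeFailure(status: number, retryAfterHeader?: string): ApiFailure {
  if (status === 429) {
    const wait = Number(retryAfterHeader);
    return {
      data: null,
      error: "Rate limit reached for the stocks API.",
      retry: true,
      // Only include the delay when Retry-After is a positive number of seconds.
      ...(Number.isFinite(wait) && wait > 0 ? { retryAfterSeconds: wait } : {}),
    };
  }
  if (status >= 500) {
    return { data: null, error: `Upstream API unavailable (HTTP ${status}).`, retry: true };
  }
  // Other 4xx errors mean the request itself was wrong; retrying it unchanged will not help.
  return { data: null, error: `Request rejected (HTTP ${status}); check the parameters.`, retry: false };
}

console.log(describeFailure(429, "30"));
console.log(describeFailure(404).retry); // false
```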

Limitations & Future Work

Multi-step workflows can be slow, especially if each tool call involves a network request or LLM inference, which adds latency. Agents don’t always recover gracefully from unexpected errors or ambiguous user input, so robust error handling is still a work in progress. As the number of tools grows, managing tool selection and orchestration becomes more complex, and frequent LLM calls and API usage can incur significant costs, especially for real-time or high-frequency workflows.

Looking ahead, I want to integrate local LLMs for privacy and cost savings, add more robust error handling and self-healing capabilities, and explore multi-agent collaboration for more complex tasks. I hope to open source the project and invite community contributions.

Summary

Building this system has been a lot of fun. Working with AI often feels like dealing with a black box, but through a lot of trial and error, I’ve gradually learned how to write better prompts and communicate with it more effectively. I’m still fine-tuning both the prompts and the tools to make it more robust and reliable.

I have a few additional tools in mind that I’d like to implement in the future, ideally running on a local LLM setup. For now, though, I’ll keep experimenting and improving things incrementally.

The project isn’t open source yet, but I plan to share it soon. I know many people who run home servers, and I think it would be amazing if we could collaborate and build something valuable for the community. Let me know if you’d be interested in contributing!
